Glossary

1. Introduction. State of the art

2. Aim of this work

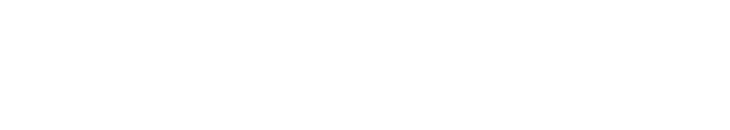

3. Methodology

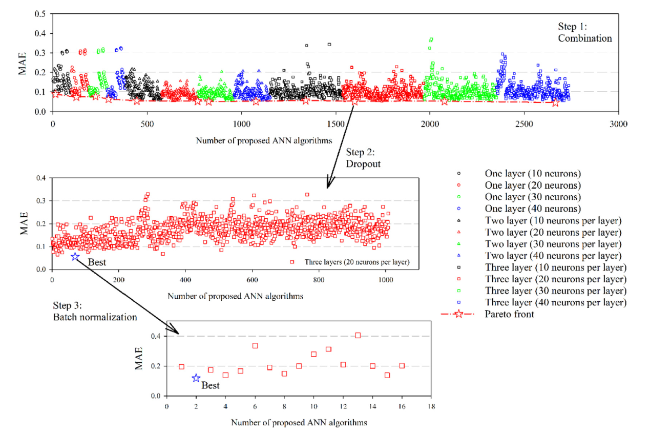

Fig. 1. Methodology employed to generate the ANN architecture.

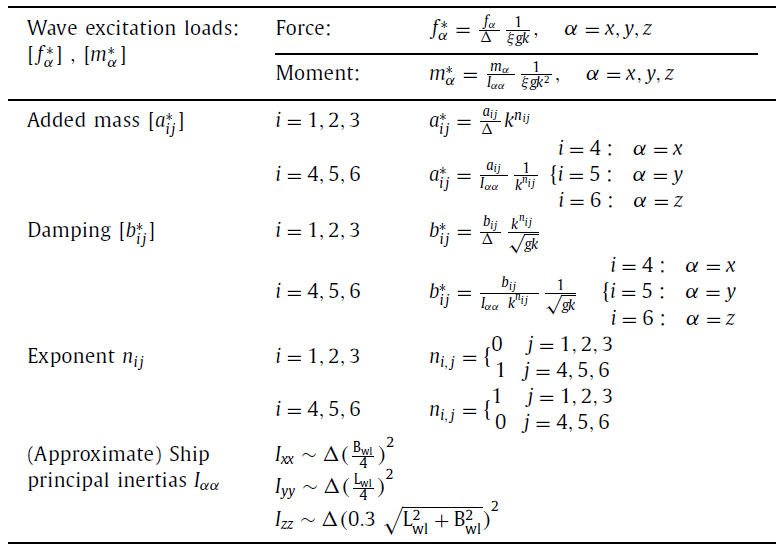

Table 1. Dimensionless seakeeping loads.

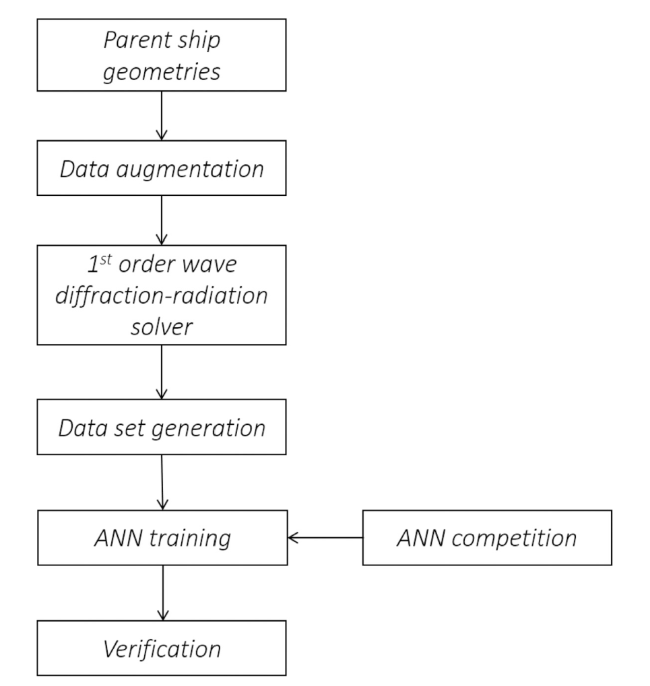

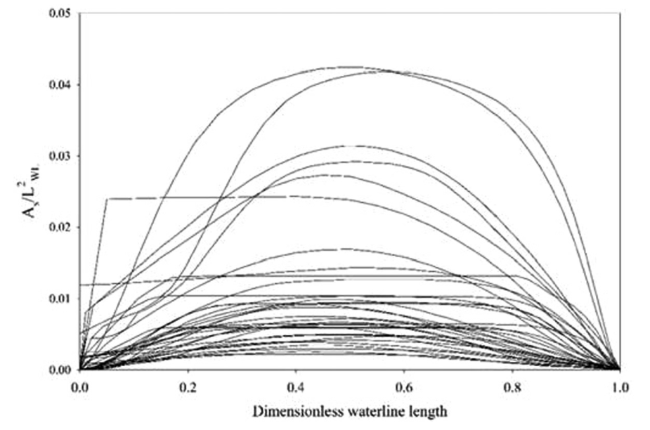

4. Data mining and simulation

4.1. Generating the dataset

Fig. 2. Dimensionless cross-sectional area for each parent geometry.

Table 2. Range of the dimensionless form coefficients for the parent ships.

| Form coefficients | Range |
|---|---|
| Block coefficient Cb | 0.331 to 0.891 |
| Waterplane coefficient Cwl | 0.625 to 0.946 |
| Midship coefficient Cm | 0.475 to 0.995 |
| Prismatic coefficient Cp | 0.522 to 0.901 |
| XB/Lwl | 0.391 to 0.554 |
| ZB/Lwl | −0.485 to −0.276 |

Fig. 3. Left: Amidship (Ac), midship (Am), and waterplane (Awl) sections of a ship. Right: Dataset representation based on sectional area ratios.

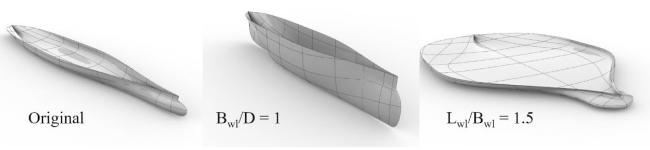

Fig. 4. Extreme parametric variation of a ship geometry.

4.2. Seakeeping simulation particulars

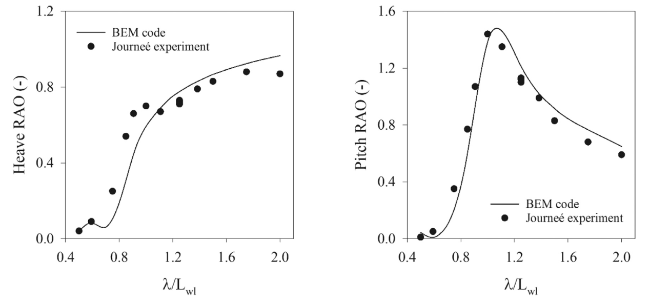

Fig. 5. Comparison between the BEM code and Journée's experimental results for the Wigley III hull at Fr = 0.2.

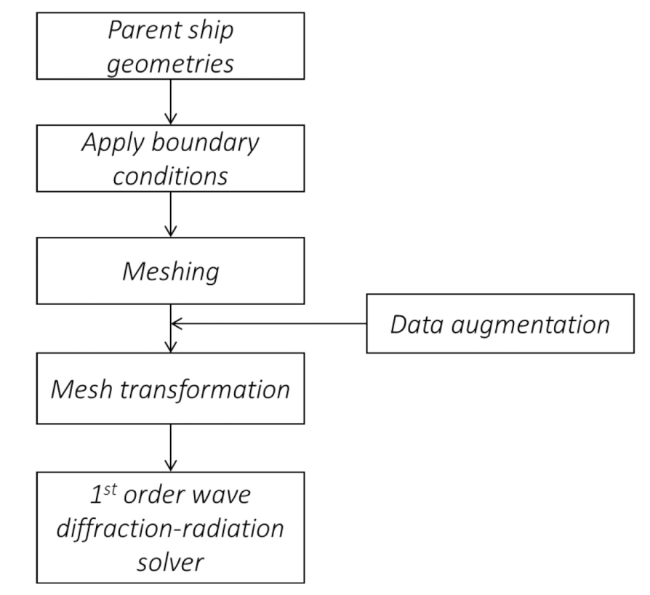

Fig. 6. Workflow for the seakeeping numerical simulation of the dataset of ships.

Table 3. Mesh sensitivity analysis for container ship hull form.

| Mesh diff. | Original | | | | B/D = 1 | | | | L/B = 1.5 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) |
| M 2 - M 1 | 1.41 | 2.39 | 0.45 | 0.10 | 0.13 | 0.59 | 0.13 | 0.00 | 2.20 | 2.86 | 6.90 | 0.73 |
| M 3 - M 2 | 0.94 | 0.32 | 0.53 | 0.03 | 0.76 | 2.52 | 0.41 | 0.03 | 9.26 | 7.62 | 7.02 | 0.80 |
| M 4 - M 3 | 1.00 | 0.76 | 0.10 | 0.01 | 0.17 | 0.33 | 0.03 | 0.00 | 8.67 | 5.96 | 7.92 | 0.82 |
| M 5 - M 4 | 0.64 | 0.45 | 0.21 | 0.00 | 0.54 | 1.38 | 0.14 | 0.00 | 1.47 | 2.13 | 1.01 | 0.11 |
| M 6 - M 5 | 0.15 | 0.15 | 0.09 | 0.00 | 0.17 | 0.68 | 0.09 | 0.01 | 0.57 | 0.63 | 1.06 | 0.10 |
| M 7 - M 6 | 0.35 | 0.17 | 0.06 | 0.00 | 0.09 | 0.16 | 0.00 | 0.00 | 0.39 | 0.52 | 0.65 | 0.07 |
| M 8 - M 7 | 0.76 | 0.35 | 0.21 | 0.02 | 0.52 | 0.67 | 0.13 | 0.00 | 1.16 | 1.55 | 2.15 | 0.18 |

Table 4. Mesh sensitivity analysis for cruise ship hull form.

| Mesh diff. | Original | | | | B/D = 1 | | | | L/B = 1.5 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) |
| M 2 - M 1 | 0.76 | 0.33 | 1.01 | 0.02 | 0.10 | 0.05 | 0.03 | 0.00 | 2.48 | 0.66 | 0.12 | 0.01 |
| M 3 - M 2 | 7.60 | 0.79 | 1.58 | 0.11 | 3.38 | 18.54 | 1.69 | 0.14 | 8.77 | 9.16 | 19.44 | 2.26 |
| M 4 - M 3 | 0.40 | 0.18 | 0.30 | 0.01 | 0.15 | 0.08 | 0.01 | 0.00 | 0.74 | 0.26 | 0.10 | 0.00 |
| M 5 - M 4 | 7.16 | 2.27 | 2.09 | 0.41 | 0.28 | 0.42 | 0.10 | 0.00 | 0.81 | 7.58 | 7.96 | 0.90 |
| M 6 - M 5 | 2.71 | 1.44 | 2.02 | 0.27 | 0.20 | 0.31 | 0.04 | 0.00 | 1.26 | 7.43 | 4.89 | 0.38 |
| M 7 - M 6 | 5.57 | 1.47 | 1.78 | 0.26 | 0.04 | 0.14 | 0.02 | 0.00 | 2.13 | 2.30 | 2.41 | 0.15 |
| M 8 - M 7 | 2.99 | 0.50 | 0.57 | 0.11 | 0.41 | 0.42 | 0.09 | 0.00 | 2.35 | 1.54 | 1.75 | 0.08 |

Table 5. Mesh sensitivity analysis for tanker ship hull form.

| Mesh diff. | Original | | | | B/D = 1 | | | | L/B = 1.5 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) |
| M 2 - M 1 | 0.32 | 0.20 | 0.11 | 0.00 | 2.01 | 0.71 | 0.09 | 0.00 | 0.36 | 2.36 | 0.34 | 0.04 |
| M 3 - M 2 | 0.33 | 0.13 | 0.06 | 0.00 | 0.02 | 0.10 | 0.02 | 0.00 | 0.08 | 0.06 | 0.03 | 0.00 |
| M 4 - M 3 | 0.05 | 0.14 | 0.07 | 0.00 | 0.18 | 0.07 | 0.01 | 0.00 | 0.06 | 0.55 | 0.13 | 0.02 |
| M 5 - M 4 | 0.56 | 0.20 | 0.06 | 0.00 | 0.05 | 0.17 | 0.01 | 0.00 | 0.12 | 0.03 | 0.02 | 0.00 |
| M 6 - M 5 | 0.09 | 0.06 | 0.05 | 0.00 | 0.08 | 0.08 | 0.03 | 0.00 | 0.11 | 0.31 | 0.05 | 0.00 |
| M 7 - M 6 | 0.23 | 0.21 | 0.15 | 0.02 | 0.10 | 0.17 | 0.05 | 0.01 | 0.13 | 0.38 | 0.19 | 0.02 |
| M 8 - M 7 | 0.10 | 0.10 | 0.01 | 0.00 | 0.18 | 0.03 | 0.01 | 0.00 | 0.06 | 0.30 | 0.06 | 0.01 |

Table 6. Mesh sensitivity analysis for frigate ship hull form.

| Mesh diff. | Original | | | | B/D = 1 | | | | L/B = 1.5 | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) | Aii (%) | Bii (%) | fi (%) | mi (%) |
| M 2 - M 1 | 6.46 | 2.61 | 1.29 | 0.07 | 1.79 | 2.79 | 0.73 | 0.05 | 5.34 | 8.16 | 2.76 | 0.20 |
| M 3 - M 2 | 3.30 | 0.37 | 0.45 | 0.03 | 0.49 | 0.20 | 0.09 | 0.00 | 0.60 | 0.34 | 0.58 | 0.06 |
| M 4 - M 3 | 0.20 | 0.12 | 0.16 | 0.00 | 0.24 | 0.25 | 0.10 | 0.00 | 0.07 | 0.14 | 0.15 | 0.01 |
| M 5 - M 4 | 0.47 | 0.60 | 0.24 | 0.01 | 0.19 | 0.08 | 0.04 | 0.00 | 0.27 | 0.14 | 0.36 | 0.03 |
| M 6 - M 5 | 0.60 | 0.22 | 0.29 | 0.01 | 0.55 | 0.41 | 0.19 | 0.01 | 0.16 | 0.19 | 0.19 | 0.02 |
| M 7 - M 6 | 0.15 | 0.10 | 0.04 | 0.00 | 0.02 | 0.01 | 0.01 | 0.00 | 0.04 | 0.02 | 0.06 | 0.01 |
| M 8 - M 7 | 0.55 | 0.31 | 0.11 | 0.01 | 0.36 | 0.54 | 0.07 | 0.00 | 0.08 | 0.07 | 0.17 | 0.01 |

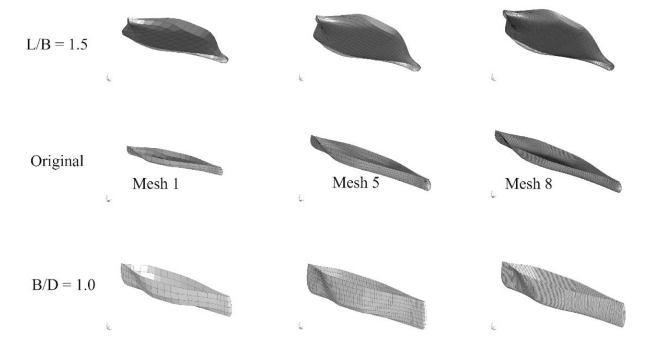

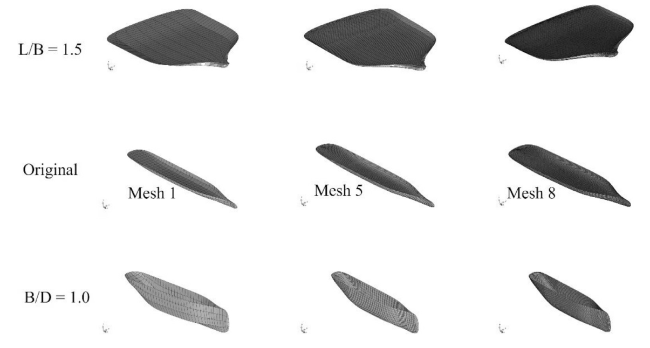

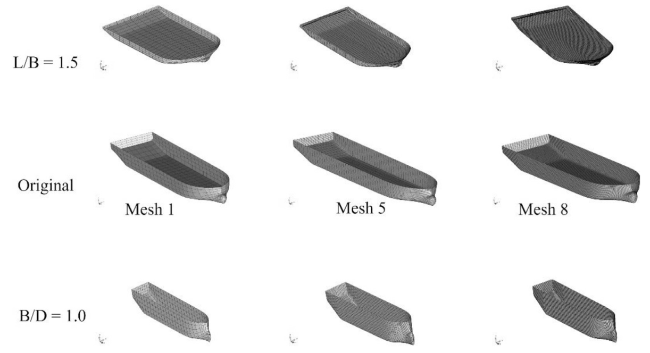

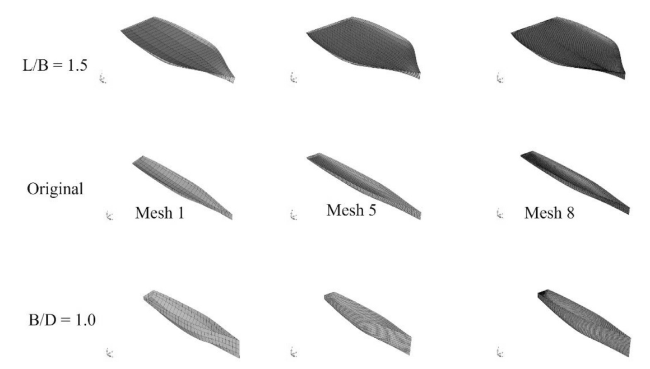

Fig. 7. Three different meshes of a container parent ship.

Fig. 8. Three different meshes of a cruise parent ship.

Fig. 9. Three different meshes of a tanker parent ship.

Fig. 10. Three different meshes of a frigate parent ship.

4.3. ANN inputs and targeted outputs

5. Predictive algorithm

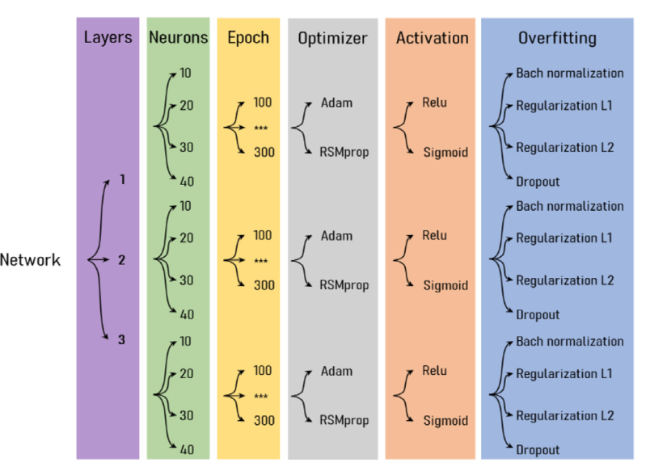

Table 7. Parametric variation of ANN architecture hyper-parameters.

| Hyper-parameter | Variation |
|---|---|
| Number of hidden layers | 1, 2, 3 |
| Number of neurons per hidden layer | 10, 20, 30, 40 |
| Optimization algorithms | Adam [37], RMSprop [38] |
| Activation functions for each layer | Sigmoid [39], ReLU [40,41] |
| Number of training epochs | ∈ [100, 300] |
| Overfitting prevention | Batch normalization [42], dropout [43,44], L1 regularization [45], L2 regularization [46] |
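
The discrete options in Table 7 define a grid of candidate architectures. The following is only a minimal sketch of how such a grid could be enumerated, not the procedure used in this work. It assumes that every hidden layer shares the same neuron count and activation function, and it leaves out the epoch range and the overfitting-prevention options. The dictionary keys and the function name `enumerate_architectures` are hypothetical.

```python
from itertools import product

# Discrete options copied from Table 7. The key names are illustrative only.
GRID = {
    "hidden_layers": [1, 2, 3],
    "neurons_per_layer": [10, 20, 30, 40],
    "optimizer": ["Adam", "RMSprop"],
    "activation": ["sigmoid", "relu"],
}


def enumerate_architectures(grid):
    """Return one dict per combination of the options in `grid`."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


candidates = enumerate_architectures(GRID)
print(len(candidates))  # 3 * 4 * 2 * 2 combinations
print(candidates[0])
```

Under these assumptions the grid holds 48 candidates. Adding the overfitting-prevention options or a per-layer choice of activation would multiply that count.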

Fig. 11. Graphic representation for the ANN architectures generated by parametric variations of hyper-parameters.

Fig. 12. MAE versus number of neurons and layers.

6. Numerical assessment and computational performance

6.1. Numerical assessment

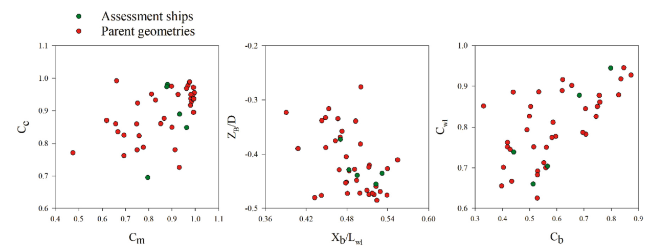

Fig. 13. Dimensionless ship form parameters for the parent ships used for training and for the five assessment ships.

Table 8. Main dimensionless hull parameters for the five assessment ships.

| Assessment test | Typology | $B_{wl}/L_{wl}$ | $D/L_{wl}$ | Cb | Cwl | Cm | Cp | $X_B/L_{wl}$ | $Z_B/D$ |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Anchor Handling | 0.286 | 0.100 | 0.683 | 0.878 | 0.963 | 0.710 | 0.495 | −0.439 |
| 2 | Landing craft | 0.318 | 0.036 | 0.798 | 0.945 | 0.933 | 0.855 | 0.523 | −0.455 |
| 3 | Trawler | 0.257 | 0.060 | 0.513 | 0.660 | 0.881 | 0.582 | 0.483 | −0.429 |
| 4 | Yacht | 0.230 | 0.034 | 0.441 | 0.739 | 0.796 | 0.554 | 0.470 | −0.372 |
| 5 | Container ship | 0.140 | 0.048 | 0.566 | 0.704 | 0.878 | 0.646 | 0.532 | −0.435 |
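
The form coefficients in Table 8 can be cross-checked with the standard naval-architecture identity Cp = Cb/Cm. The identity holds when all three coefficients refer to the same length, beam and draught, which is assumed here and is not stated in the table. The sketch below copies Cb, Cm and Cp from Table 8. The function names are hypothetical.

```python
# (typology, Cb, Cm, Cp) copied from Table 8.
ASSESSMENT_SHIPS = [
    ("Anchor Handling", 0.683, 0.963, 0.710),
    ("Landing craft", 0.798, 0.933, 0.855),
    ("Trawler", 0.513, 0.881, 0.582),
    ("Yacht", 0.441, 0.796, 0.554),
    ("Container ship", 0.566, 0.878, 0.646),
]


def prismatic_from_block(cb, cm):
    """Prismatic coefficient implied by the identity Cp = Cb / Cm."""
    return cb / cm


def max_cp_deviation(ships):
    """Largest absolute gap between Cb/Cm and the tabulated Cp."""
    return max(abs(prismatic_from_block(cb, cm) - cp) for _, cb, cm, cp in ships)


for name, cb, cm, cp in ASSESSMENT_SHIPS:
    implied = prismatic_from_block(cb, cm)
    print(f"{name:16s} Cb/Cm = {implied:.3f}   tabulated Cp = {cp:.3f}")
```

For the five assessment ships the implied and tabulated values differ by less than 0.002, which is of the order expected from three-decimal rounding of the inputs.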

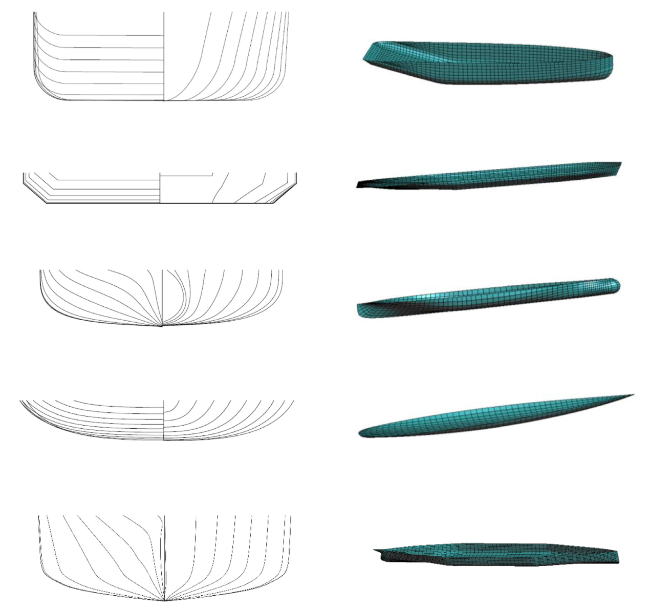

Fig. 14. Hull shapes below waterline for the five tests. Left: Body plan; Right: 3D view.

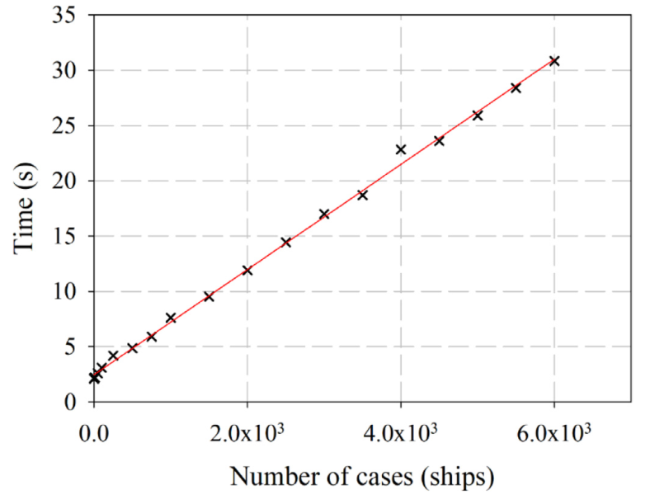

6.2. Computational performance

Fig. 15. Computational time in seconds versus number of cases computed.

7. Results discussion

Table 9. Normalized relative mean error for predicted dimensionless added masses.

| MNRE (%) | a11* | a22* | a33* | a44* | a55* | a66* | Average |
|---|---|---|---|---|---|---|---|
| Anchor Handling | 0.35 | 4.44 | 4.45 | 4.34 | 4.17 | 3.26 | 3.50 |
| Landing craft | 0.58 | 1.13 | 1.47 | 12.02 | 3.40 | 1.58 | 3.36 |
| Trawler | 0.20 | 4.01 | 3.18 | 4.62 | 3.18 | 4.84 | 3.34 |
| Yacht | 0.19 | 4.14 | 4.21 | 6.05 | 1.68 | 0.97 | 2.87 |
| Container ship | 0.24 | 5.50 | 5.33 | 5.99 | 7.25 | 3.93 | 4.71 |
| Average | 0.31 | 3.84 | 3.73 | 6.61 | 3.94 | 2.92 | 3.56 |
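
The "Average" row and column of Table 9 appear to be plain arithmetic means of the tabulated entries. The short check below reproduces them from the values copied from the table. It is only an illustrative sketch, and the variable and function names are hypothetical.

```python
# MNRE (%) for a11*..a66*, copied from Table 9.
ADDED_MASS_MNRE = {
    "Anchor Handling": [0.35, 4.44, 4.45, 4.34, 4.17, 3.26],
    "Landing craft": [0.58, 1.13, 1.47, 12.02, 3.40, 1.58],
    "Trawler": [0.20, 4.01, 3.18, 4.62, 3.18, 4.84],
    "Yacht": [0.19, 4.14, 4.21, 6.05, 1.68, 0.97],
    "Container ship": [0.24, 5.50, 5.33, 5.99, 7.25, 3.93],
}
# "Average" column and row as printed in Table 9.
TABULATED_ROW_AVG = {
    "Anchor Handling": 3.50,
    "Landing craft": 3.36,
    "Trawler": 3.34,
    "Yacht": 2.87,
    "Container ship": 4.71,
}
TABULATED_COL_AVG = [0.31, 3.84, 3.73, 6.61, 3.94, 2.92]


def row_averages(table):
    """Mean over the six degrees of freedom for each ship."""
    return {ship: sum(vals) / len(vals) for ship, vals in table.items()}


def column_averages(table):
    """Mean over the five ships for each degree of freedom."""
    return [sum(col) / len(col) for col in zip(*table.values())]


for ship, mean in row_averages(ADDED_MASS_MNRE).items():
    print(f"{ship:16s} computed {mean:.2f}   tabulated {TABULATED_ROW_AVG[ship]:.2f}")
```

The recomputed means match the tabulated ones to within 0.01, a gap consistent with averaging unrounded values before the table was printed.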

Table 10. Normalized relative mean error for predicted dimensionless dampings.

| MNRE (%) | b11* | b22* | b33* | b44* | b55* | b66* | Average |
|---|---|---|---|---|---|---|---|
| Anchor Handling | 0.38 | 6.07 | 1.26 | 0.73 | 3.93 | 6.77 | 3.19 |
| Landing craft | 0.23 | 1.87 | 5.89 | 13.14 | 6.40 | 1.04 | 4.76 |
| Trawler | 0.43 | 3.75 | 1.76 | 2.27 | 4.77 | 5.16 | 3.02 |
| Yacht | 0.24 | 1.04 | 5.25 | 7.05 | 7.12 | 0.44 | 3.52 |
| Container ship | 0.31 | 2.44 | 3.95 | 3.28 | 4.10 | 1.84 | 2.65 |
| Average | 0.32 | 3.03 | 3.62 | 5.30 | 5.27 | 3.05 | 3.43 |

8. Conclusions

Declaration of Competing Interest

Appendix A

Table A1. Parent ships.

| Ship no. | Type | L/B | B/D | Cb | Fr |
|---|---|---|---|---|---|
| 1 | Cargo ship | 4.492 | 2.500 | 0.56 | 0.322 |
| 2 | Tugboat | 3.233 | 2.284 | 0.50 | 0.454 |
| 3 | Bulk carrier | 6.753 | 2.311 | 0.75 | 0.171 |
| 4 | Bulk carrier | 6.389 | 3.852 | 0.74 | 0.186 |
| 5 | Container ship | 6.644 | 2.538 | 0.55 | 0.275 |
| 6 | Container ship | 6.235 | 4.125 | 0.53 | 0.259 |
| 7 | Supply vessel | 4.013 | 2.839 | 0.53 | 0.610 |
| 8 | Supply vessel | 4.757 | 4.457 | 0.33 | 0.652 |
| 9 | Cruise ship | 8.432 | 4.386 | 0.60 | 0.191 |
| 10 | Cruise ship | 7.825 | 6.351 | 0.58 | 0.238 |
| 11 | Tanker ship | 5.951 | 2.714 | 0.87 | 0.131 |
| 12 | Drill ship | 5.951 | 4.750 | 0.84 | 0.153 |
| 13 | FPSO | 5.858 | 4.521 | 0.83 | 0.129 |
| 14 | Harbor tug | 2.922 | 2.607 | 0.62 | 0.342 |
| 15 | Harbor tug | 2.362 | 3.861 | 0.66 | 0.307 |
| 16 | Heavy lift ship | 3.657 | 5.556 | 0.85 | 0.158 |
| 17 | Patrol vessel | 5.902 | 2.855 | 0.49 | 0.474 |
| 18 | Patrol vessel | 6.156 | 4.503 | 0.40 | 0.452 |
| 19 | Cargo vessel | 5.860 | 2.250 | 0.71 | 0.240 |
| 20 | Cargo vessel | 5.520 | 3.374 | 0.71 | 0.304 |
| 21 | Frigate | 6.347 | 3.159 | 0.51 | 0.514 |
| 22 | Frigate | 6.003 | 6.022 | 0.40 | 0.541 |
| 23 | Ro-Ro | 7.063 | 4.127 | 0.53 | 0.234 |
| 24 | Supply vessel | 3.640 | 2.566 | 0.62 | 0.411 |
| 25 | Yacht | 4.806 | 2.947 | 0.42 | 0.325 |
| 26 | Yacht | 9.163 | 3.215 | 0.43 | 0.299 |
| 27 | Yacht | 6.253 | 3.165 | 0.42 | 0.457 |
| 28 | Benchmark hull | 10.000 | 1.587 | 0.43 | 0.250 |
| 29 | Cargo ship | 2.482 | 5.034 | 0.59 | 0.234 |
| 30 | Container ship | 3.644 | 3.703 | 0.53 | 0.265 |
| 31 | Container ship | 7.042 | 2.659 | 0.56 | 0.305 |
| 32 | Cargo vessel | 5.701 | 2.690 | 0.70 | 0.281 |
| 33 | Yacht | 3.526 | 2.304 | 0.44 | 0.346 |
| 34 | Cargo vessel | 6.667 | 2.312 | 0.76 | 0.220 |
| 35 | Yacht | 6.250 | 2.158 | 0.50 | 0.348 |
| 36 | LNG ship | 6.187 | 4.104 | 0.76 | 0.187 |
| 37 | Bulk carrier | 6.771 | 1.926 | 0.77 | 0.194 |
| 38 | Bulk carrier | 6.459 | 2.992 | 0.79 | 0.202 |
| 39 | Supply vessel | 4.077 | 3.455 | 0.47 | 0.681 |
| 40 | Container ship | 6.545 | 2.198 | 0.59 | 0.292 |
| 41 | Container ship | 6.185 | 5.077 | 0.61 | 0.309 |
| 42 | Drill ship | 5.838 | 3.455 | 0.87 | 0.138 |
| 43 | Drill ship | 6.326 | 5.833 | 0.89 | 0.160 |
| 44 | FPSO | 5.728 | 9.042 | 0.81 | 0.159 |
| 45 | Harbor tug | 3.862 | 1.714 | 0.61 | 0.308 |
| 46 | Patrol vessel | 6.606 | 3.289 | 0.37 | 0.469 |
| 47 | Cargo vessel | 6.489 | 3.783 | 0.76 | 0.279 |
| 48 | Cargo vessel | 5.203 | 1.798 | 0.74 | 0.265 |
| 49 | Frigate | 6.389 | 4.174 | 0.45 | 0.523 |
| 50 | Frigate | 6.123 | 2.086 | 0.45 | 0.579 |

Appendix B

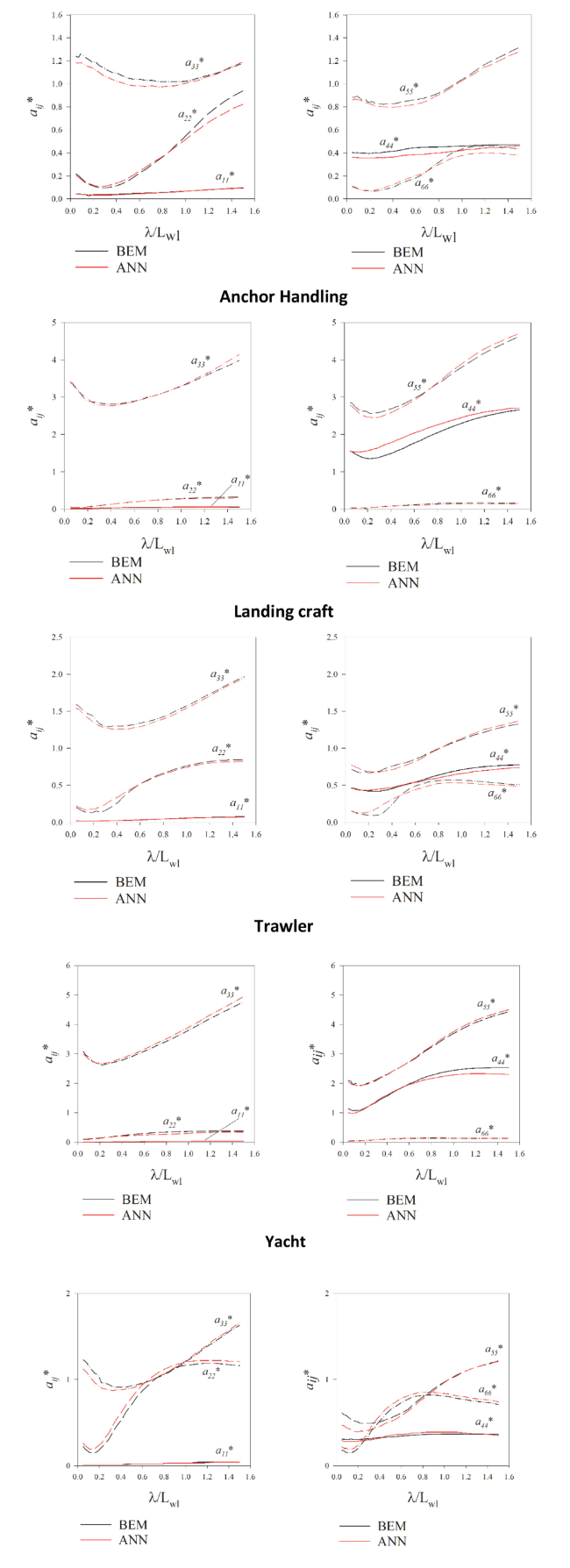

Fig. B.1. Added mass comparison between ANN and BEM for the five assessment ships.

Appendix C

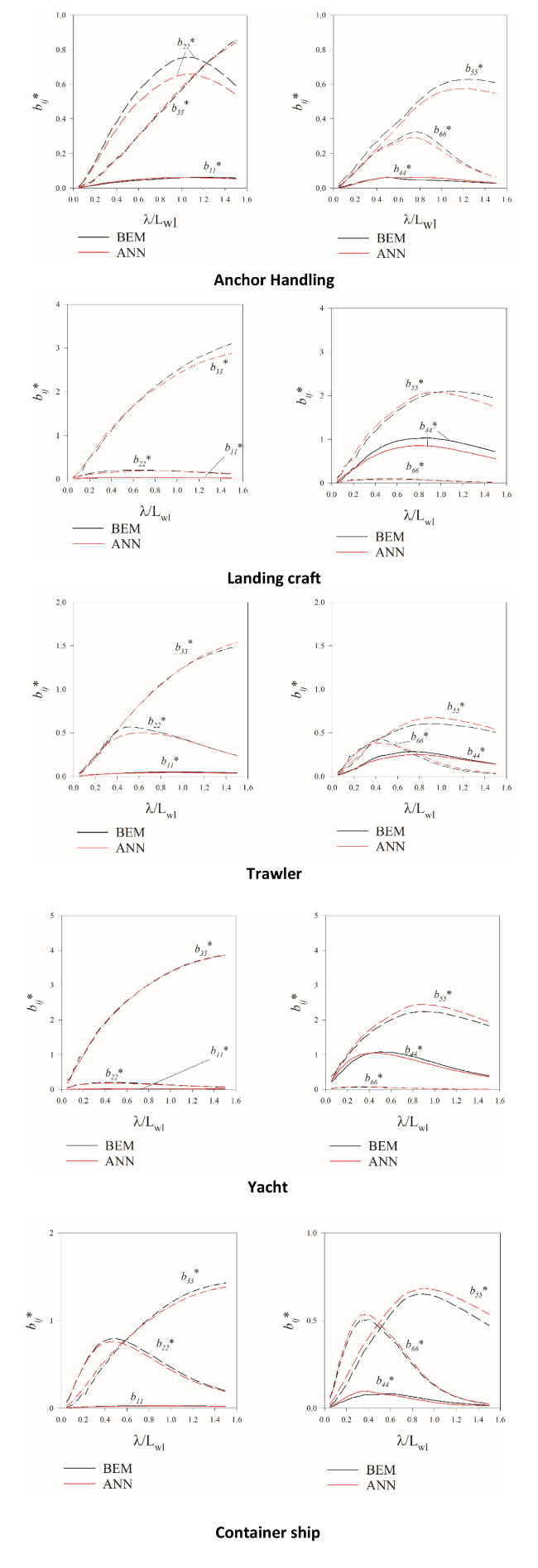

Fig. C.1. Damping comparison between ANN and BEM for the five assessment ships.

Appendix D

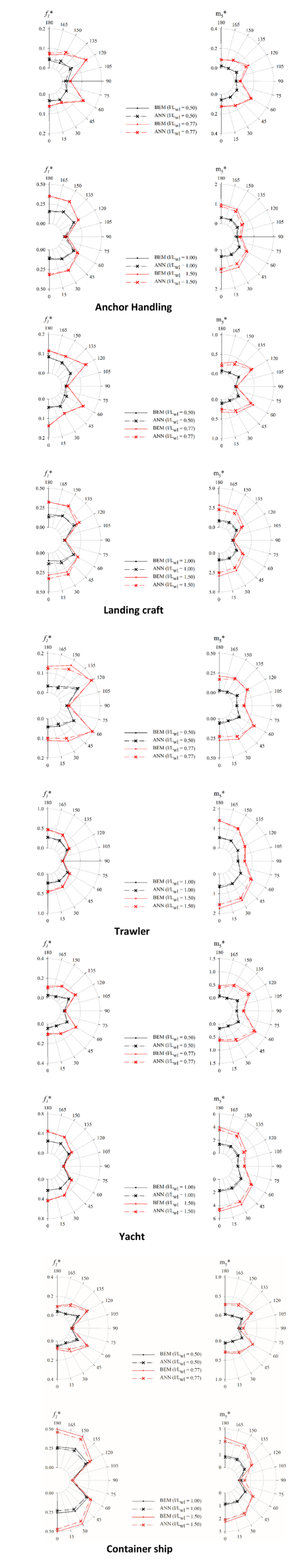

Fig. D.1. Wave excitation loads comparison between ANN and BEM for the five assessment ships.

Appendix E

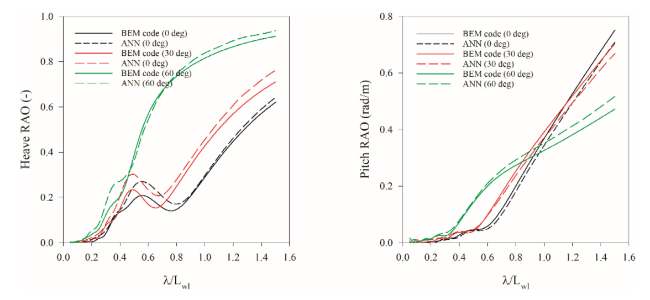

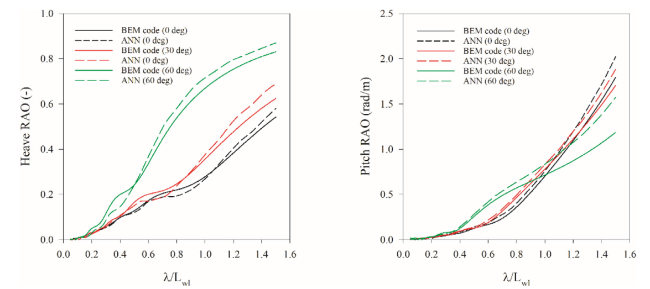

Fig. E.1. Heave and pitch RAO comparisons between ANN and BEM for the container assessment ship.

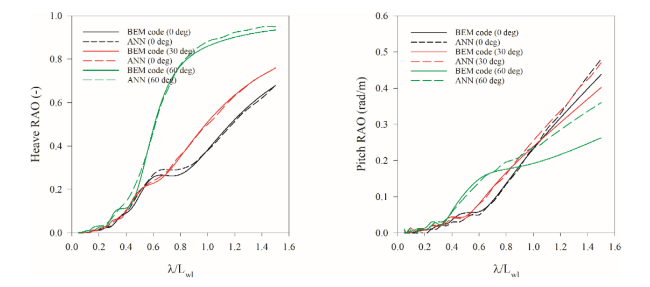

Fig. E.2. Heave and pitch RAO comparisons between ANN and BEM for the yacht assessment ship.

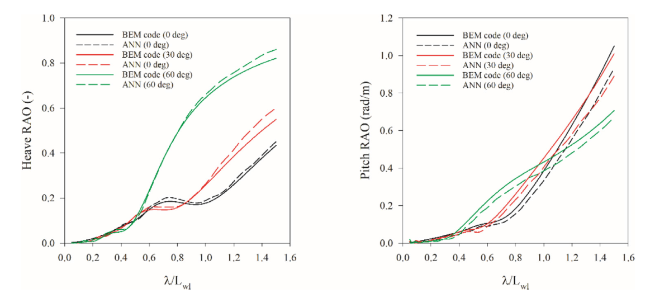

Fig. E.3. Heave and pitch RAO comparisons between ANN and BEM for the trawler assessment ship.

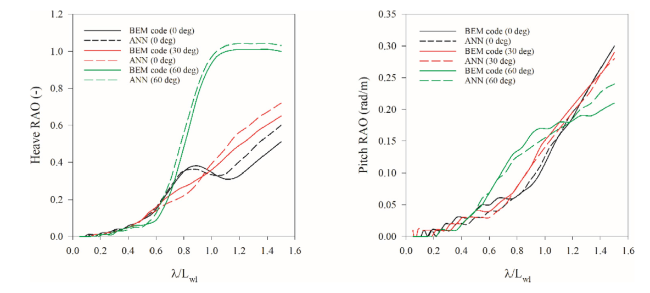

Fig. E.4. Heave and pitch RAO comparisons between ANN and BEM for the landing craft assessment ship.

Fig. E.5. Heave and pitch RAO comparisons between ANN and BEM for the anchor handling assessment ship.

Appendix F

Table F.1. Assessment ship 1 (Anchor Handling): MNRE for excitation loads.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| fx | 1.27 | 0.47 | 0.89 | 1.71 | 0.46 | 0.69 | 1.01 | 0.93 |
| fy | 0.01 | 1.58 | 2.19 | 9.36 | 4.21 | 1.61 | 0.01 | 2.71 |
| fz | 2.88 | 2.37 | 1.37 | 1.29 | 2.72 | 2.34 | 1.36 | 2.05 |
| mx | 0.01 | 2.67 | 11.59 | 7.50 | 4.02 | 4.06 | 0.01 | 4.27 |
| my | 5.18 | 6.53 | 2.60 | 2.87 | 4.26 | 3.96 | 2.98 | 4.05 |
| mz | 0.01 | 0.92 | 1.71 | 0.50 | 1.23 | 2.44 | 0.01 | 0.97 |
| Average | 1.56 | 2.43 | 3.39 | 3.87 | 2.82 | 2.52 | 0.90 | 2.50 |

Table F.2. Assessment ship 2 (Landing craft): MNRE for excitation loads.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| fx | 3.43 | 2.57 | 0.51 | 0.27 | 1.63 | 1.04 | 1.54 | 1.57 |
| fy | 0.01 | 0.51 | 1.04 | 1.53 | 2.16 | 1.10 | 0.01 | 0.91 |
| fz | 1.57 | 1.17 | 2.10 | 1.51 | 2.78 | 1.77 | 4.71 | 2.23 |
| mx | 0.01 | 2.05 | 6.12 | 11.18 | 1.99 | 2.65 | 0.01 | 3.43 |
| my | 7.76 | 7.84 | 7.72 | 0.70 | 6.25 | 8.22 | 9.21 | 6.81 |
| mz | 0.01 | 0.94 | 1.52 | 0.21 | 1.56 | 0.29 | 0.01 | 0.65 |
| Average | 2.13 | 2.51 | 3.17 | 2.57 | 2.73 | 2.51 | 2.58 | 2.60 |

Table F.3. Assessment ship 3 (Trawler): MNRE for excitation loads.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| fx | 0.98 | 1.33 | 0.47 | 0.51 | 0.50 | 1.61 | 0.50 | 0.84 |
| fy | 0.01 | 1.72 | 3.32 | 2.19 | 4.52 | 1.20 | 0.01 | 1.85 |
| fz | 1.36 | 1.19 | 1.98 | 2.00 | 1.83 | 1.99 | 2.02 | 1.77 |
| mx | 0.01 | 4.58 | 2.76 | 2.54 | 4.16 | 6.27 | 0.01 | 2.90 |
| my | 5.71 | 4.13 | 2.48 | 1.23 | 1.01 | 1.62 | 1.86 | 2.58 |
| mz | 0.01 | 0.76 | 0.80 | 1.69 | 0.96 | 0.78 | 0.01 | 0.71 |
| Average | 1.35 | 2.29 | 1.97 | 1.69 | 2.16 | 2.24 | 0.74 | 1.78 |

Table F.4. Assessment ship 4 (Yacht): MNRE for excitation loads.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| fx | 0.71 | 0.32 | 0.38 | 0.28 | 0.96 | 0.34 | 0.77 | 0.54 |
| fy | 0.01 | 3.28 | 1.98 | 0.86 | 5.45 | 3.83 | 0.01 | 2.20 |
| fz | 3.33 | 3.94 | 4.24 | 2.95 | 3.48 | 2.34 | 3.11 | 3.34 |
| mx | 0.01 | 8.38 | 5.48 | 3.21 | 5.61 | 8.31 | 0.01 | 4.43 |
| my | 4.24 | 6.29 | 4.66 | 0.74 | 7.14 | 6.35 | 5.79 | 5.03 |
| mz | 0.01 | 0.72 | 0.36 | 0.61 | 0.53 | 0.52 | 0.01 | 0.39 |
| Average | 1.38 | 3.82 | 2.85 | 1.44 | 3.86 | 3.62 | 1.62 | 2.66 |

Table F.5. Assessment ship 5 (Container ship): MNRE for excitation loads.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| fx | 1.74 | 2.29 | 1.53 | 1.43 | 1.84 | 1.85 | 1.62 | 1.76 |
| fy | 0.01 | 1.57 | 1.82 | 1.01 | 2.98 | 1.23 | 0.01 | 1.23 |
| fz | 2.63 | 3.95 | 1.90 | 2.25 | 1.41 | 1.07 | 2.49 | 2.24 |
| mx | 0.01 | 8.25 | 16.08 | 14.37 | 6.59 | 4.94 | 0.01 | 7.18 |
| my | 4.48 | 4.09 | 2.38 | 16.53 | 4.43 | 5.91 | 6.24 | 6.29 |
| mz | 0.10 | 0.99 | 0.32 | 4.54 | 0.47 | 0.29 | 0.01 | 0.96 |
| Average | 1.50 | 3.52 | 4.01 | 6.69 | 2.95 | 2.55 | 1.73 | 3.28 |

Appendix G

Table G.1. Assessment ship 1 (Anchor Handling): MNRE for RAO.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| RAO33 | 3.76 | 3.47 | 3.31 | 2.43 | 3.42 | 2.63 | 1.76 | 2.97 |
| RAO44 | 0.28 | 0.13 | 0.22 | 0.24 | 0.22 | 0.16 | 0.00 | 0.18 |
| RAO55 | 0.79 | 0.69 | 1.23 | 1.38 | 0.79 | 0.58 | 0.63 | 0.87 |
| Average | 1.61 | 1.43 | 1.59 | 1.35 | 1.48 | 1.12 | 0.79 | 1.34 |

Table G.2. Assessment ship 2 (Landing craft): MNRE for RAO.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| RAO33 | 0.96 | 1.90 | 1.37 | 2.36 | 1.58 | 1.07 | 1.59 | 1.55 |
| RAO44 | 0.00 | 0.50 | 1.88 | 2.89 | 1.23 | 0.58 | 0.00 | 1.01 |
| RAO55 | 4.45 | 4.54 | 2.98 | 2.56 | 3.76 | 5.39 | 6.56 | 4.32 |
| Average | 1.81 | 2.31 | 2.08 | 2.61 | 2.19 | 2.35 | 2.72 | 2.29 |

Table G.3. Assessment ship 3 (Trawler): MNRE for RAO.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| RAO33 | 1.05 | 0.67 | 1.72 | 1.90 | 1.15 | 2.27 | 2.07 | 1.55 |
| RAO44 | 0.00 | 0.25 | 0.53 | 1.21 | 0.79 | 0.21 | 0.00 | 0.43 |
| RAO55 | 1.34 | 2.14 | 3.77 | 5.88 | 2.10 | 0.69 | 1.34 | 2.47 |
| Average | 0.80 | 1.02 | 2.01 | 3.00 | 1.35 | 1.06 | 1.14 | 1.48 |

Table G.4. Assessment ship 4 (Yacht): MNRE for RAO.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| RAO33 | 1.41 | 2.57 | 3.47 | 1.64 | 2.76 | 1.86 | 2.40 | 2.30 |
| RAO44 | 0.00 | 0.37 | 0.83 | 1.86 | 1.53 | 0.51 | 0.00 | 0.73 |
| RAO55 | 5.29 | 4.16 | 12.21 | 8.40 | 6.92 | 6.85 | 5.97 | 7.11 |
| Average | 2.23 | 2.37 | 5.50 | 3.97 | 3.74 | 3.07 | 2.79 | 3.38 |

Table G.5. Assessment ship 5 (Container ship): MNRE for RAO.

| MNRE [%] by heading | 0° | 30° | 60° | 90° | 120° | 150° | 180° | Average |
|---|---|---|---|---|---|---|---|---|
| RAO33 | 2.69 | 3.81 | 2.92 | 2.85 | 2.13 | 0.88 | 2.68 | 2.57 |
| RAO44 | 0.00 | 0.12 | 0.39 | 0.48 | 0.38 | 0.18 | 0.00 | 0.22 |
| RAO55 | 1.92 | 1.62 | 1.96 | 6.73 | 1.75 | 2.32 | 2.61 | 2.70 |
| Average | 1.54 | 1.85 | 1.76 | 3.35 | 1.42 | 1.13 | 1.76 | 1.83 |