Ocean wave information is valuable for a variety of tasks, including marine operations, maritime transport, and ocean environment monitoring. The X-band marine radar has been widely used for ocean wave remote sensing tasks due to its ability to image the sea surface with high temporal and spatial resolutions. Naaijen et al. [

1] used X-band radar data to estimate wave elevation while combining a wave propagation and vessel response model to predict future vessel motions. Lyzenga et al. [

2] employed X-band Doppler radar data to inverse phase-resolved wave field. Nieto-Borge et al. [

3] used a wave monitoring system to derive sea state parameters and compared the results with buoy data. Al-Ani et al. [

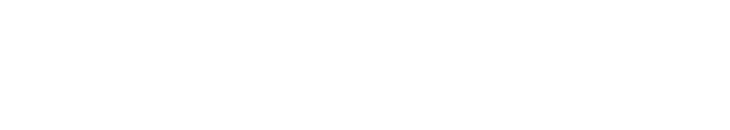

4] successfully applied a short-term deterministic sea wave prediction (DSWP) technique to the field radar data collected at North Atlantic. The radar sea clutter image is generated through the Bragg resonance interaction between the capillary waves and the electromagnetic waves [

5]. It is well-known that gravity waves modulate the backscatter signals through tilt modulation, shadowing modulation, and hydrodynamic modulation [

6], [

7], and therefore the radar image does not measure the surface elevation directly. The traditional spectral analysis method based on the three-dimensional fast Fourier transform is used to extract wave parameters from radar images [

8]. By applying 3D-FFT to a radar image sequence, the image spectrum is estimated, which can then be used to estimate wave spectrum and parameters. Various statistical parameters of the ocean environment can be extracted, such as significant wave height and period, wave direction, sea surface current, and wind speeds [

8], [

9], [

10]. Radar images can also be used for bathymetry mapping [

11]. As an example of practical applications, the Wave Monitoring System (WaMos) is based on the spectral analysis method [

12], [

13]. The statistical parameters provide useful information for relative long-term marine tasks and transport, but they cannot offer quantitative wave information in a specific temporal and spatial location [

14]. Therefore, sea surface reconstruction is required, and there are many challenges for various short-term activities, such as ship motion prediction, vessel positioning, and helicopter landing [

15].
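The 3D-FFT spectral analysis can be illustrated with a short NumPy sketch. It is a minimal example under stated assumptions, not the method of [8] or the WaMos implementation: the "image sequence" is a hypothetical synthetic surface containing a single deep-water wave, the dispersion-shell filter assumes deep water and no surface current (ω² = g|k|), and the sketch omits the steps that turn an image spectrum into a wave spectrum (such as a modulation transfer function and calibration against in situ data). It therefore recovers only the peak period and the propagation direction. The helper names `synthetic_sequence` and `peak_parameters`, the grid sizes, and the `shell_width` tolerance are all hypothetical.

```python
import numpy as np

G = 9.81  # gravitational acceleration [m/s^2]


def synthetic_sequence(nt=64, ny=128, nx=128, dt=1.0, dy=7.5, dx=7.5,
                       period=10.0, direction_deg=30.0):
    """Hypothetical stand-in for a radar image sequence: a single deep-water
    wave cos(kx*x + ky*y - omega*t) sampled on a (t, y, x) grid."""
    omega = 2.0 * np.pi / period
    k = omega**2 / G                         # deep water: omega^2 = g*k
    theta = np.deg2rad(direction_deg)        # propagation direction
    t = (np.arange(nt) * dt)[:, None, None]  # broadcast over (t, y, x)
    y = (np.arange(ny) * dy)[None, :, None]
    x = (np.arange(nx) * dx)[None, None, :]
    return np.cos(k * np.cos(theta) * x + k * np.sin(theta) * y - omega * t)


def peak_parameters(seq, dt, dy, dx, shell_width=1.5):
    """Estimate peak period [s] and propagation direction [deg] from a
    (t, y, x) sequence using a 3D-FFT and a deep-water dispersion filter."""
    nt, ny, nx = seq.shape
    spec = np.fft.fftn(seq - seq.mean())     # 3D spectrum (t, y, x)
    power = np.abs(spec) ** 2
    f = np.fft.fftfreq(nt, d=dt)             # temporal frequency [Hz]
    ky = 2.0 * np.pi * np.fft.fftfreq(ny, d=dy)  # wavenumber [rad/m]
    kx = 2.0 * np.pi * np.fft.fftfreq(nx, d=dx)
    # NumPy applies exp(-2*pi*i*...) on every axis, so a wave
    # exp(i(k.x - omega*t)) appears at f = -omega/(2*pi) and +k.
    # Map f to omega = -2*pi*f and keep only omega > 0.
    W = (-2.0 * np.pi * f)[:, None, None]
    k_mag = np.hypot(kx[None, None, :], ky[None, :, None])
    d_omega = 2.0 * np.pi / (nt * dt)        # frequency resolution [rad/s]
    # Keep bins within shell_width * d_omega of the dispersion shell.
    on_shell = np.abs(W - np.sqrt(G * k_mag)) <= shell_width * d_omega
    filtered = np.where((W > 0) & on_shell, power, 0.0)
    it, iy, ix = np.unravel_index(np.argmax(filtered), filtered.shape)
    peak_period = 2.0 * np.pi / W[it, 0, 0]
    # Direction of travel, counterclockwise from the +x axis.
    peak_dir = np.degrees(np.arctan2(ky[iy], kx[ix]))
    return peak_period, peak_dir


seq = synthetic_sequence()
tp, direction = peak_parameters(seq, dt=1.0, dy=7.5, dx=7.5)
print(f"Tp = {tp:.2f} s, direction = {direction:.1f} deg")
```

The spectrum is sampled on discrete bins, with a frequency resolution of 1/(nt·dt) and a wavenumber resolution of 2π/(nx·dx). The recovered values therefore fall near, rather than exactly on, the 10 s period and 30° direction used to build the sequence. Longer sequences and larger radar footprints sharpen the estimate. The direction follows a mathematical "going to" convention, not the nautical "coming from" convention.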