Artificial intelligence (AI) techniques have been widely used in fields such as natural language processing [

11], computer vision [

12], and stock and futures price prediction [

13], [

14]. Deep learning models can be trained to find a mapping relation between the historical and future data of the physical quantity to be forecasted, and then predict the value quickly at one or more future times. This feature of deep learning mitigates the difficulties inherent in real-time wave prediction based on phase-resolved wave models [

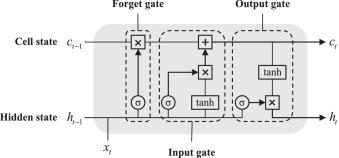

15], particularly in recent years, the rapid development of computer hardware and AI has sparked an active research program on wave prediction based on deep learning. Long-Short-Term Memory (LSTM), Multi Layer Perceptrons (MLP), and Support Vector Regression (SVR) have all been used for forecasting the long-term or short-term characteristics of waves (e.g.,

and

, etc.) or extreme weather [

16], [

17], [

18], [

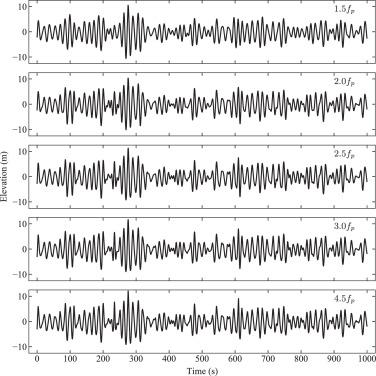

19]. Using the HOS method, Law et al. [

20] simulated 2000 wave time series that corresponded to the sea state off the south coast of Western Australia to train a perceptron model containing only two hidden layers. The model prediction error and inference time were significantly shorter than those of a first-order linear wave model. Duan et al. [

21] proposed and applied the ANN-WP model to predict experimental acquisition wave data. The predicted wave elevation was in general agreement with the experimental measurements in most sea states. Ma et al. [

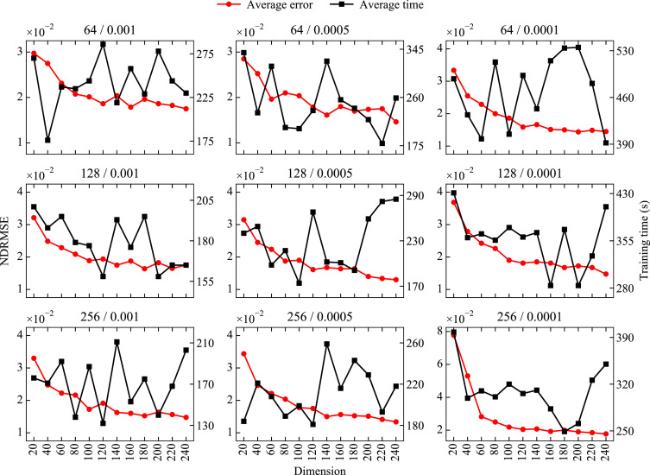

22] examined the influence of three alternative training procedures on the error using the ANN-WP model. They concluded that deep learning models should be trained using a mixture of data from different sea states to ensure model prediction accuracy. As far as the author is aware, the recent deterministic wave prediction models based on deep learning techniques employ a ‘point’ strategy, in which the forecast result at each subsequent time step is a fixed value. However, because wave evolution is a stochastic process impacted by the environment and its components, the wave elevation at each subsequent time point is uncertain. The DeepAR [

23] model, which is based on the ‘probabilistic’ strategy, is used to forecast data with uncertainty (urban traffic and electricity consumption), demonstrating the ability of the ‘probabilistic’ strategy to anticipate uncertainty. More precisely, the prediction of the model is the probability distribution of corresponding physical quantities, and the resampling under this distribution constitutes the final prediction result of the model. Because resampling is a stochastic process, the deep learning models trained by the ‘probabilistic’ strategy can be used to forecast data uncertainty. The ‘probabilistic’ strategy can also provide confidence intervals based on the probability distribution, enhancing the robustness of the model [

23], [

24]. Consequently, this research will attempt to forecast the wave’s short-period time series with a deep learning model based on the ‘probabilistic’ strategy.
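
To make the difference between the ‘point’ and ‘probabilistic’ strategies concrete, the following minimal Python sketch assumes that a trained network outputs a Gaussian mean and standard deviation for each future time step. The function names (`gaussian_nll`, `sample_forecast`, `interval`) and the numerical values are hypothetical and chosen only for illustration; they are not taken from DeepAR or from the model developed in this work. For simplicity, each step is sampled independently, whereas an autoregressive model such as DeepAR would feed every sampled value back into the network before predicting the next step.

```python
import math
import random
import statistics


def gaussian_nll(y, mu, sigma):
    """Negative log-likelihood of an observation y under N(mu, sigma^2).

    A loss of this form can be minimized when a network is trained to
    output a distribution (mu, sigma) instead of a single point value.
    """
    return 0.5 * math.log(2.0 * math.pi * sigma ** 2) + (y - mu) ** 2 / (2.0 * sigma ** 2)


def sample_forecast(mus, sigmas, n_samples=1000, seed=0):
    """Draw n_samples trajectories, sampling each step from its own Gaussian."""
    rng = random.Random(seed)
    return [[rng.gauss(m, s) for m, s in zip(mus, sigmas)] for _ in range(n_samples)]


def interval(samples, step, lower=0.05, upper=0.95):
    """Empirical quantile interval of the sampled values at one future step."""
    values = sorted(path[step] for path in samples)
    lo = values[int(lower * (len(values) - 1))]
    hi = values[int(upper * (len(values) - 1))]
    return lo, hi


# Hypothetical per-step network outputs (illustrative wave elevations).
mus = [0.10, 0.35, 0.20]
sigmas = [0.05, 0.08, 0.12]

paths = sample_forecast(mus, sigmas)

# A 'point'-style summary (per-step median) and a 90% band around it.
point = [statistics.median(p[k] for p in paths) for k in range(len(mus))]
bands = [interval(paths, k) for k in range(len(mus))]
```

In this sketch the band widens as the predicted standard deviation grows, which is the information a ‘point’ forecast cannot convey.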